Decentralizing Verification: Finding Trust In a Trustless World

Why bottom-up approaches to moderation will outperform centrally-planned ones

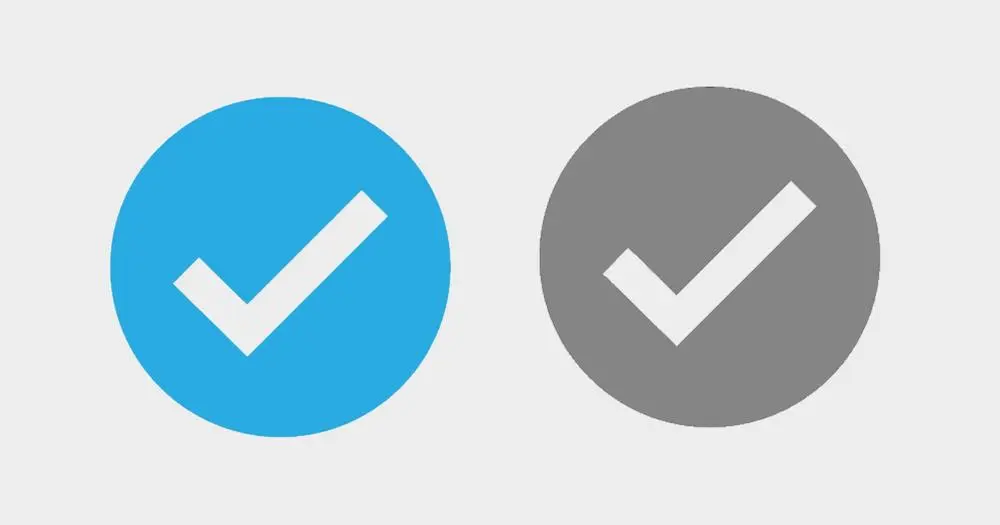

With Twitter, a single entity is responsible for doling out "blue checkmarks" to users based on certain criteria. Until recently, the process was fairly opaque and highly subjective, with many reports of employees taking bribes of up to $15,000 for those sweet, sweet checkmarks.

Elon tried to shake things up by simply charging $8 per month to give anyone a blue checkmark. But this quickly resulted in scam impersonator accounts fooling people into thinking that they were legitimate when they were actually just paying $8.

At the root of the issue is the fact that Twitter is the sole entity in charge of doling out checkmarks: the checkmark czar, if you will. But what if we could engineer a more decentralized system? One that relied on the entire network's ability to curate, rather than just one company?

With DeSo, all content is stored on-chain, and anyone can run their own feed that curates the content however they see fit. Every profile, post, follow, comment, like, etc. is fully open for anyone to build off of, as a globally-accessible and permissionless firehose of content. This gave us an opportunity to re-imagine how trust works, and to develop a novel verification system that is not only fairer, but that also encourages people to launch their own apps and feeds.

How It Works

Imagine, for a moment, that anyone using any app on the DeSo blockchain has the ability to verify another user. When they do this, they create an association on-chain between themselves and the user they are verifying, but we don't give anybody any checkmarks yet. We don't need to get into the details of how this is implemented yet. Instead, just assume for now that you can query the blockchain's database to look up the following whenever you want:

- Users you've verified. For a particular user, fetch all the users who this user has verified.

- Users who have verified you. For a particular user, fetch all the users who have verified this user.

By allowing this capability, the blockchain starts to develop a graph of trust between all of the users on the network. This is very valuable because it makes it so that every user's curation activity can feed into our verification scheme.
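To make the two lookups above concrete, here is a minimal sketch of the trust graph as an in-memory structure. It is not how DeSo stores associations on-chain; the `TrustGraph` class, its method names, and the usernames are all hypothetical stand-ins chosen for illustration.

```python
from collections import defaultdict


class TrustGraph:
    """Hypothetical in-memory stand-in for on-chain verification associations."""

    def __init__(self):
        # verifier -> set of users they have verified
        self._verified_by_user = defaultdict(set)
        # user -> set of users who have verified them
        self._verifiers_of_user = defaultdict(set)

    def verify(self, verifier: str, target: str) -> None:
        """Record that `verifier` has verified `target` (one edge in the graph)."""
        self._verified_by_user[verifier].add(target)
        self._verifiers_of_user[target].add(verifier)

    def verified_by(self, verifier: str) -> set[str]:
        """Users you've verified."""
        return set(self._verified_by_user.get(verifier, ()))

    def verifiers_of(self, target: str) -> set[str]:
        """Users who have verified you."""
        return set(self._verifiers_of_user.get(target, ()))


graph = TrustGraph()
graph.verify("alice", "bob")
graph.verify("alice", "carol")
graph.verify("dave", "bob")

print(sorted(graph.verified_by("alice")))
print(sorted(graph.verifiers_of("bob")))
```

Keeping both directions indexed means either query is a single lookup, which is roughly what you would want from any real index over the association data.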

Now, the next step is to determine how we assign checkmarks to users, and what these checkmarks actually look like. Again, because DeSo allows anyone to run their own app off of the firehose of content, we get a bit of a superpower in terms of being able to decentralize the decisions around who to trust.

In particular, each DeSo app can select a subset of users whose verifications it will honor with the holy checkmark in that particular app. For example, Diamond could choose one set of users to honor verifications for, while Pearl or Desofy could choose a wholly unrelated set. Concretely, Diamond could assign checkmarks to verifications made by @Krassenstein, @WhaleSharkETH, and @ItsAditya, while Pearl could assign checkmarks to verifications made by @Goldberry, @VishalGulia, and @amandajohnstone (or any other subset of users).

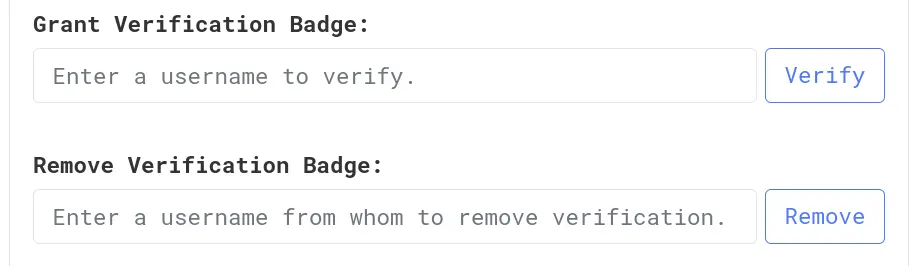

This would be as simple as specifying a list of usernames in a DeSo node's admin panel as trusted verifiers (note we use the term node and app interchangeably, since every DeSo app typically also runs a DeSo node, which gives them a full copy of all the content on the network and the ability to configure various parameters for their feeds). And if anyone becomes a little too liberal with the checkmarks, they can simply be removed from the list in the admin panel, thus doing away with every checkmark they've granted in that particular app's UI. Their verification associations will still exist on-chain, but they will not be honored by the app's UI (though they can still be honored by others).
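As a rough sketch of the idea, the same on-chain verifications can produce different checkmarks depending on each app's trusted verifier list. The trusted lists below come from the example above; the verification pairs, the users `alice` and `bob`, and the `has_checkmark` helper are made up purely for illustration.

```python
# Made-up example data: (verifier, verified user) pairs as they might exist on-chain.
VERIFICATIONS = {
    ("Krassenstein", "alice"),
    ("ItsAditya", "bob"),
    ("Goldberry", "bob"),
}


def has_checkmark(user, trusted_verifiers, verifications=VERIFICATIONS):
    """A user gets a checkmark in an app if any of that app's trusted verifiers verified them."""
    return any((verifier, user) in verifications for verifier in trusted_verifiers)


# Each app keeps its own list, e.g. entered in its admin panel.
diamond_trusted = {"Krassenstein", "WhaleSharkETH", "ItsAditya"}
pearl_trusted = {"Goldberry", "VishalGulia", "amandajohnstone"}

print(has_checkmark("alice", diamond_trusted))  # honored on Diamond
print(has_checkmark("alice", pearl_trusted))    # not honored on Pearl

# Removing a verifier from one app's list drops the checkmarks they granted there,
# while the underlying associations (and other apps) are untouched.
diamond_after_removal = diamond_trusted - {"ItsAditya"}
print(has_checkmark("bob", diamond_after_removal))
print(has_checkmark("bob", pearl_trusted))
```

Note that nothing is deleted when a verifier is removed: the app simply stops consulting their verifications.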

Below is what the old verification mechanic looked like in a node's admin panel, soon to be replaced with a comma-separated list of trusted verifier usernames:

In addition to specifying who the trusted verifiers are for a particular node, node operators can also specify that they want verifications to go N levels deep. For example, setting verifications to go two levels deep would mean that you get a checkmark in the app's UI as long as either a trusted verifier verified you OR a trusted verifier verified someone who then verified you (two levels). This would allow for more checkmarks to be given out without too much overhead, and with minimal dilution in quality.
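One way to picture N-level verification is as a depth-limited breadth-first walk over the trust graph, starting from the trusted verifiers. The sketch below assumes the graph is available as a plain dictionary; the function name, the data, and the usernames are hypothetical.

```python
from collections import deque


def users_with_checkmarks(verified_by, trusted_verifiers, max_depth):
    """Return users reachable within `max_depth` verification hops of a trusted verifier.

    verified_by: dict mapping a verifier to the set of users they verified.
    max_depth=1 honors only direct verifications by trusted verifiers;
    max_depth=2 also honors verifications made by those directly verified users.
    """
    checkmarked = set()
    visited = set(trusted_verifiers)
    queue = deque((verifier, 0) for verifier in trusted_verifiers)

    while queue:
        verifier, depth = queue.popleft()
        if depth >= max_depth:
            continue  # this user's verifications are too far from a trusted verifier
        for user in verified_by.get(verifier, ()):
            checkmarked.add(user)
            if user not in visited:
                visited.add(user)
                queue.append((user, depth + 1))

    return checkmarked


# Made-up chain: trusted -> alice -> bob -> carol
verified_by = {
    "trusted": {"alice"},
    "alice": {"bob"},
    "bob": {"carol"},
}

print(sorted(users_with_checkmarks(verified_by, {"trusted"}, max_depth=1)))
print(sorted(users_with_checkmarks(verified_by, {"trusted"}, max_depth=2)))
```

With a depth of one, only `alice` qualifies; with a depth of two, `bob` qualifies as well, while `carol` would need a depth of three.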

Lastly, as an added design component, a checkmark can be replaced with a small badge containing the profile pic of the trusted verifier who verified you. Or, if multiple people have verified you, a small list of badges. Users can hover over these badges and see who each person is, and how much they trust them. Whether to use a checkmark or list of pics can be left to the node operator to configure in the admin panel with a single click.
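A small sketch of how an app might decide what to render next to a username, assuming for simplicity that only direct verifications count. The `verification_badges` function, the `use_badges` toggle, and the data are hypothetical; a real UI would render profile pics rather than return usernames.

```python
def verification_badges(user, verifiers_of, trusted_verifiers, use_badges=True):
    """Return what to display for `user`: one badge per trusted verifier, or a single checkmark.

    verifiers_of: dict mapping a user to the set of users who verified them.
    use_badges: stands in for the node operator's one-click display setting.
    """
    trusted = sorted(verifiers_of.get(user, set()) & set(trusted_verifiers))
    if not trusted:
        return []  # nobody this app trusts has verified the user
    return trusted if use_badges else ["checkmark"]


# Made-up data: mallory verified bob, but this app does not trust mallory.
verifiers_of = {"bob": {"alice", "dave", "mallory"}}
trusted_verifiers = {"alice", "dave"}

print(verification_badges("bob", verifiers_of, trusted_verifiers))
print(verification_badges("bob", verifiers_of, trusted_verifiers, use_badges=False))
```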

The Growth Implications

Although it seems like a small change at first, allowing node operators to delegate verifications to the users of each node can provide a powerful incentive for people to run nodes/feeds in the first place.

In particular, the added incentive to either become a trusted verifier or to be verified in general gives new nodes an edge in terms of bringing users in. For example, people who were not selected as trusted verifiers on other nodes may flock to new nodes that bestow that honor upon them. And the same goes for people who are seeking verification on existing nodes as well.

Additionally, it incentivizes diversification in the types of content that nodes focus on. For example, someone with a large network of professional athletes can spin up a node where only professional athletes are trusted verifiers. Another person with a large network in politics can spin up a node where only politicians are trusted verifiers.

The ability to selectively empower a distinct subset of users on each frontend/app that spins up can heavily influence the content that becomes popular on that particular app.

Decentralizing Moderation and Curation

Until now, we've discussed selecting a set of users to act as trusted verifiers. But there is no reason why the delegation needs to stop there.

In particular, all nodes also maintain a set of local hot feed parameters and a local blacklist that allow for node-level content curation. The idea would be to push these parameters down to the user level too, and to store all of them on-chain as associations so that any app can immediately leverage them.

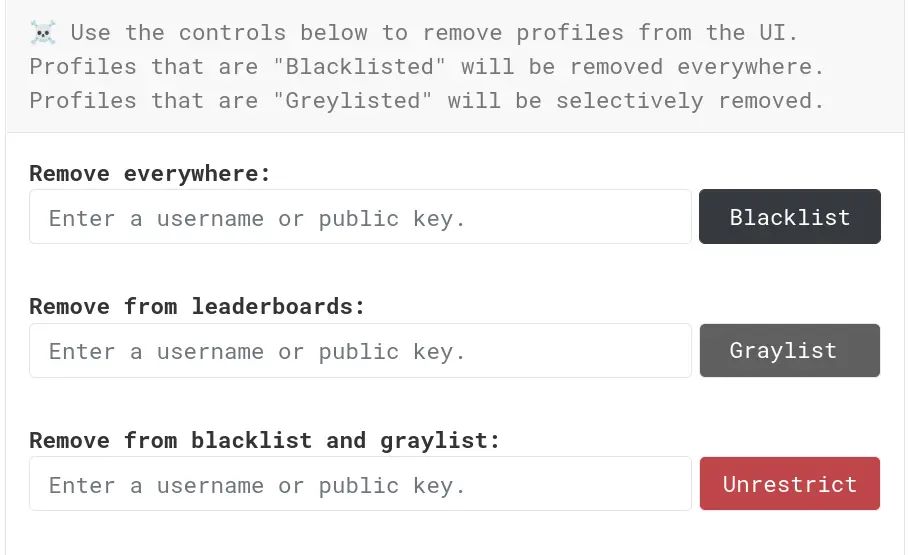

For example, if someone is being extremely toxic, a particular node can greylist or blacklist the user, which only impacts that user on the particular node where they were greylisted/blacklisted. Today, being greylisted means users no longer show up in search results or leaderboards, while being blacklisted results in their profile being undiscoverable on a particular node.

There is no reason why this can't also be moved from the node level to the user level in the same way that we're doing for verifications. Namely, node operators can provide a comma-separated list of trusted moderator usernames that are allowed to greylist/blacklist people as they see fit.
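Here is a minimal sketch of what honoring trusted moderators could look like on a single node. The tuple layout, the action names, and the rule that a blacklist outranks a greylist are assumptions made for illustration, not a description of the actual association format.

```python
def moderation_status(user, moderation_actions, trusted_moderators):
    """Resolve a user's status on one node from moderation actions made by trusted moderators.

    moderation_actions: iterable of (moderator, target, action) tuples, where
    action is "greylist" or "blacklist" (hypothetical encoding).
    """
    actions = {
        action
        for moderator, target, action in moderation_actions
        if target == user and moderator in trusted_moderators
    }
    if "blacklist" in actions:
        return "blacklisted"  # profile undiscoverable on this node
    if "greylist" in actions:
        return "greylisted"   # hidden from search results and leaderboards
    return "visible"


# Made-up moderation decisions stored as associations.
moderation_actions = [
    ("mod_a", "troll", "greylist"),
    ("mod_b", "troll", "blacklist"),
    ("mod_c", "alice", "blacklist"),
]

# Two nodes honoring different moderators reach different outcomes from the same data.
print(moderation_status("troll", moderation_actions, {"mod_a"}))
print(moderation_status("troll", moderation_actions, {"mod_a", "mod_b"}))
print(moderation_status("alice", moderation_actions, {"mod_a", "mod_b"}))
```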

Below is what the greylist/blacklist UI looks like today in a node's admin panel, to be replaced with a comma-separated list of trusted moderator usernames:

Similarly, every node comes with a default hot feed with adjustable parameters at a user level. For example, if someone is really awesome, the user-level multiplier for that user can be increased in order to make their posts score higher when computing that node's hot feed. Similar to verifications and blacklisting, this power can also be moved from the node level to the user level. In particular, node operators can specify a comma-separated list of trusted curator usernames who are allowed to modify the hot feed multipliers for users and their posts.
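The sketch below shows one way curator-set multipliers could feed into a post's score. How multipliers from several curators should combine is not specified here, so multiplying them together is purely an assumption, as are the function name, the tuple layout, and the numbers.

```python
import math


def hot_feed_score(base_score, author, multipliers, trusted_curators):
    """Scale a post's base score by multipliers that trusted curators set for its author.

    multipliers: iterable of (curator, author, multiplier) tuples (hypothetical encoding).
    Assumption: multipliers from several trusted curators are multiplied together;
    with no applicable multiplier the score is left unchanged.
    """
    factor = math.prod(
        multiplier
        for curator, target, multiplier in multipliers
        if target == author and curator in trusted_curators
    )
    return base_score * factor


# Made-up curation decisions stored as associations.
multipliers = [
    ("curator_a", "alice", 2.0),
    ("curator_b", "alice", 0.5),
    ("curator_c", "alice", 10.0),  # ignored unless a node trusts curator_c
]

print(hot_feed_score(10.0, "alice", multipliers, {"curator_a"}))
print(hot_feed_score(10.0, "alice", multipliers, {"curator_a", "curator_b"}))
print(hot_feed_score(10.0, "bob", multipliers, {"curator_a"}))
```

A different node could trust a different set of curators and end up with a different hot feed from exactly the same on-chain data.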

Below is a snippet of the current hot feed adjustment UI in a node's admin panel, to be replaced with a comma-separated list of trusted curator usernames:

Importantly, every time a user adjusts a greylist/blacklist or adjusts a hot feed parameter for another user, this information can be stored directly on-chain as an association that any other app can tap into. This is a big deal because it means that, with very little effort, someone can spin up a new node that leverages all prior moderation and curation decisions but with a few twists that the node operator deems fit (e.g. modifying the various trusted moderator usernames or trusted curator usernames). It also means that all moderation and curation decisions can be made in a totally transparent fashion, which presents a stark contrast to the black-box approach taken by today's social media companies.

User-Level Moderation and Curation

The core concept behind DeSo is that putting all content on-chain moves us from a world where a single company controls a social network to one where thousands of nodes/apps can build permissionlessly off of the same open firehose of content. But the concepts described in this post take things one step further...

In particular, we are moving decisions about moderation and curation from the node level or app level down to the user level. Moreover, by storing all of the moderation decisions on-chain, we are making it so that every app on the network is strengthening every other app constantly. The moderation decisions made on Diamond, for example, can immediately be leveraged by Pearl or Desofy, and vice versa.

Parting Thoughts: The Power of Decentralization

Fundamentally, DeSo's thesis is that centrally-planned networks like Twitter are failing to leverage the full power and ingenuity of their user-base and developer-base when it comes to both the curation of content and the moderation of content.

By creating a network where anyone can verify anyone and where anyone can moderate anything, we are not only unlocking the previously-unharnessed power of the masses, but we are also creating a system where competition and choice can flourish in a way they haven't been able to in the past. A world where no one corporate entity controls moderation, but rather thousands of independent apps offer their own feeds and work together to provide a previously-unimagined user experience.

PS: Associations are launching in a few weeks! This is the on-chain upgrade that's going to make this all possible. If you'd like to learn more about it, check out our draft spec here.